Any developer wants to see the code changes instantaneously reflected in the application server.

However, when using WebSphere Application Server (WAS), developers usually have to go through the process of deploying an application to the server. Even though the deployment support is integrated into Rational Application Developer (RAD) or Eclipse WTP, it still introduces delays and impedes productivity. Not to mention that Eclipse WTP does not actually support WAS 6.1 runtimes, only 6.0.

This is unfortunate because actually WAS 6.1 has good support for dynamic reloading. With dynamic reloading turned on, WAS monitors changes on the file system and automatically reloads the module (i.e., all classes loaded by the module’s classloader) when it detects a change. The reloading is almost instantaneous for simple modules. For complex modules with a lot of classes or initialization logic the reloading step could take a little bit of time but it is still faster than redeploying an entire application (you should check out Java Rebel if you want a truly instantaneous deployment).

With dynamic reloading all we need to do in order to make our changes available to the server is to update class files in the location where the deployed application resides. This is especially straightforward for web application and classes under WEB-INF/classes since WAS always explodes web application archives during deployment. In case of jar files (say the ones under WEB-INF/lib) the situation is a more complicated.

Unfortunately, the location of the deployed application is usually different from the workspace where a developer makes changes. By default, deployed binaries are located under profile_root/installedApps/cell_name. While this location can be changed, the directory structure will still be somewhat different from how code is organized in the workspace.

We could write a simple Ant script to copy changes, but this again introduces a special “pseudo-deployment” step. It would be nice if we could simply make a change in Eclipse, save it and let dynamic reloading kick in without any extra steps.

Turns out that it is quite possible to make WAS and Eclipse behave this way.

First, let’s configure WAS:

* Log in to WAS admin console and make sure that “Run in development mode” is checked for your sever. This is the default for standalone installations.

* Deploy your application to WAS using WAS admin console.

* For convenience, you may want to specify a non-standard location for application binaries during installation to shorten the path, e.g., “was_installed_apps”. This step is optional.

* Go to “Enterprise applications/your_app/Class loading and update detection”.

* Make sure that “reload classes” is checked.

* Set reload interval to some reasonable number, say “3”. By default it’s set to “0” which means “never”. IBM recommends 3 seconds as an optimal interval, although I’ve been using 1 second without any issues (for relatively small modules though).

* Stop and start the application.

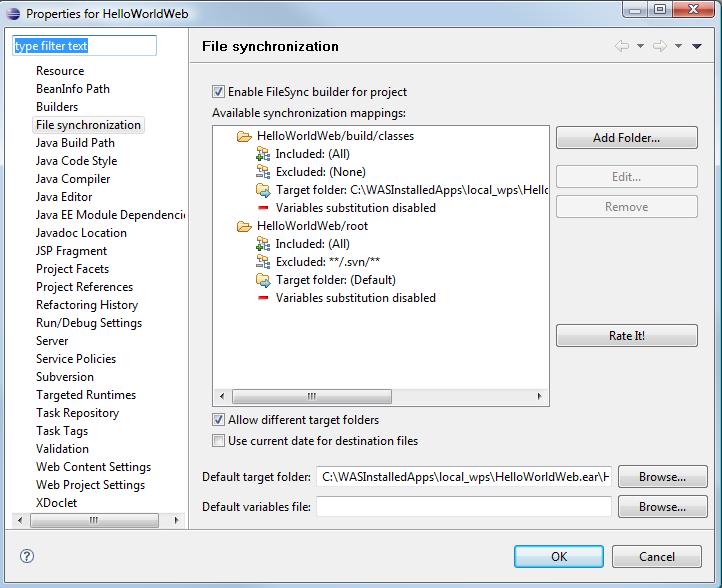

Now let’s configure Eclipse. We will have to create a resource link pointing to the deployed application and configure the project to compile classes to the deployed location.

* Go to “Java Build Path” of the project. Click on “Browse” next to “Default output folder”.

* Click “Create New Folder…”, “Advanced”, check “Link to folder in the file system”.

* Click on “Browse” and locate the root of the exploded WAR file in the deployed application location. For example, for application “HelloWorldWeb” the path will be “profile_root/installedApps/cell_name/HelloWorldWeb.ear/HelloWorldWeb.war”. Give the link a meaningful name, e.g., “deployment”. Note: if you share .project and .classpath files with other developers, use Eclipse variables instead of the absolute path.

* Click OK. This will create a resource link that you can use to specify the output folder.

* Change the output folder to point to “project_name/link_name/WEB_INF/classes”, e.g., “HelloWorldWeb/deployment/WEB-INF/classes”. Click OK.

* Eclipse will recompile your project.

* From this point forward any class change will trigger dynamic reloading on the server.

* The resource link is also available in your package explorer, so you can browse and edit the deployed files. You need to be careful if you want to edit JSPs or other files that way as they will be overridden by the next full re-deployment.

This techniques takes care of class files only. Dynamic reloading of JSP files is a different story.

Note: This has been tested only with Eclipse 3.4 and WAS 6.1 and on modules with a relatively small code base. I’d be curious to know how effective this approach is for large modules.

This post is part of the series on WebSphere Application Server administration. Please subscribe to our blog if you’d like to receive updates.

Note: We offer professional services in the area of WebSphere architecture, implementation and operations. If you’re looking for help with any of these tasks, please let us know.